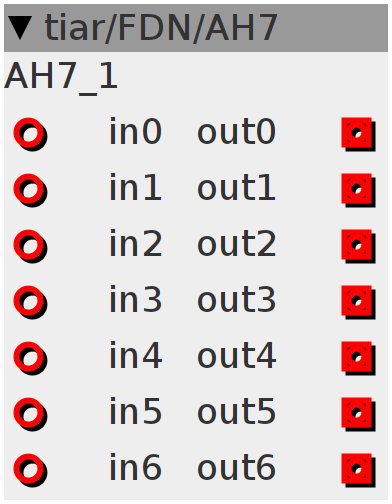

AH7

7x7 Almost Hadamard matrix

Inlets

frac32buffer in0

frac32buffer in1

frac32buffer in2

frac32buffer in3

frac32buffer in4

frac32buffer in5

frac32buffer in6

Outlets

frac32buffer out0

frac32buffer out1

frac32buffer out2

frac32buffer out3

frac32buffer out4

frac32buffer out5

frac32buffer out6
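The object multiplies the seven inlet signals by the 7x7 matrix tabulated in the code comments below. Outlet i receives the sum over j of M[i][j] times inlet j, and every entry M[i][j] is either A or B. The C++ sketch below is a plain double-precision reference for that product. It is not the object's fixed-point code, and the names `ah7_coeff`, `ah7_reference` and `ah7_orthogonality_error` are hypothetical. It also checks two properties that follow from the table. First, the rows are orthonormal, so the transform preserves signal energy. Second, the matrix is symmetric, so applying it twice returns the input.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Sketch only: A and B are copied from the comment in the object's code,
// and the strings transcribe its "truncated Hadamard form" table
// (row = output index, column = input index).
constexpr double kA = -0.26120387496374144251476820691706;
constexpr double kB = 0.4459029062228060818860761551878;
constexpr const char* kPattern[7] = {"BABABAB", "ABBAABB", "BBAABBA",
                                     "AAABBBB", "BABBABA", "ABBBBAA",
                                     "BBABAAB"};

using Vec7 = std::array<double, 7>;

// Matrix entry M[i][j], looked up from the A/B pattern.
double ah7_coeff(int i, int j) { return kPattern[i][j] == 'A' ? kA : kB; }

// out[i] = sum over j of M[i][j] * in[j], as a plain 49-multiply product.
Vec7 ah7_reference(const Vec7& in) {
  Vec7 out{};
  for (int i = 0; i < 7; ++i)
    for (int j = 0; j < 7; ++j) out[i] += ah7_coeff(i, j) * in[j];
  return out;
}

// Largest deviation of M * M^T from the identity matrix. A value near
// zero means the rows are orthonormal.
double ah7_orthogonality_error() {
  double worst = 0.0;
  for (int i = 0; i < 7; ++i)
    for (int k = 0; k < 7; ++k) {
      double dot = 0.0;
      for (int j = 0; j < 7; ++j) dot += ah7_coeff(i, j) * ah7_coeff(k, j);
      worst = std::fmax(worst, std::fabs(dot - (i == k ? 1.0 : 0.0)));
    }
  return worst;
}
```

For example, `ah7_reference(ah7_reference(x))` returns `x` up to double rounding error for any input vector `x`.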

```cpp
// A = -0.26120387496374144251476820691706
// B =  0.4459029062228060818860761551878

// truncated Hadamard form (rows = outputs y0..y6, columns = inputs x0..x6)
//    0 1 2 3 4 5 6
// 0  B A B A B A B
// 1  A B B A A B B
// 2  B B A A B B A
// 3  A A A B B B B
// 4  B A B B A B A
// 5  A B B B B A A
// 6  B B A B A A B

// cost: 14 multiplies (one ___SMMUL and one ___SMMLA per output), 35 additions
```
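The comment gives A and B only as decimals. They can be recovered from the table itself. This is a reconstruction, not something the object's source states. In each row the A entries occupy three columns: {1,3,5}, {0,3,4}, {2,3,6}, {0,1,2}, {1,4,6}, {0,5,6} and {2,4,5} for rows 0 to 6. Any two of these sets share exactly one column. As a result, two distinct rows overlap in one A·A term, two B·B terms and four A·B terms. Requiring unit-length, mutually orthogonal rows then gives:

$$
\begin{aligned}
3A^2 + 4B^2 &= 1 &&\text{(unit-length rows)}\\
A^2 + 2B^2 + 4AB &= 0 &&\text{(orthogonal rows)}\\
\Rightarrow\; A &= (\sqrt{2} - 2)\,B, \qquad B = \frac{2 + 3\sqrt{2}}{14} \approx 0.4459029062, \qquad A = \frac{1 - 2\sqrt{2}}{7} \approx -0.2612038750\\
A \cdot 2^{32} &\approx -1121862100.6, \qquad B \cdot 2^{32} \approx 1915138399.4
\end{aligned}
$$

The code uses the root A/B = √2 − 2. The other root, −2 − √2, would make |A| larger than |B|. These closed forms reproduce the decimal digits in the comment. Both magnitudes are below 0.5, so the constants scaled by 2^32 fit in `int32_t`, and the integers in the code lie within one unit of the products above. Each row also sums to 3A + 4B = 1, so an identical signal on all seven inlets passes through unchanged.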

```cpp
// ___SMMUL(a, b) returns the upper 32 bits of the 64-bit product a * b, and
// ___SMMLA(a, b, acc) returns acc plus that value, so the constants below are
// A and B scaled by 2^32. Inputs that share a coefficient are summed first,
// so each output costs only two multiplies.
void AH7(int32_t x0, int32_t x1, int32_t x2, int32_t x3, int32_t x4, int32_t x5,
         int32_t x6, int32_t &y0, int32_t &y1, int32_t &y2, int32_t &y3,
         int32_t &y4, int32_t &y5, int32_t &y6) {
  int32_t A = -1121862100;  // A * 2^32
  int32_t B = 1915138399;   // B * 2^32
  y0 = ___SMMLA(x1 + x3 + x5, A, ___SMMUL(x0 + x2 + x4 + x6, B));
  y1 = ___SMMLA(x0 + x3 + x4, A, ___SMMUL(x1 + x2 + x5 + x6, B));
  y2 = ___SMMLA(x2 + x3 + x6, A, ___SMMUL(x0 + x1 + x4 + x5, B));
  y3 = ___SMMLA(x0 + x1 + x2, A, ___SMMUL(x3 + x4 + x5 + x6, B));
  y4 = ___SMMLA(x1 + x4 + x6, A, ___SMMUL(x0 + x2 + x3 + x5, B));
  y5 = ___SMMLA(x0 + x5 + x6, A, ___SMMUL(x1 + x2 + x3 + x4, B));
  y6 = ___SMMLA(x2 + x4 + x5, A, ___SMMUL(x0 + x1 + x3 + x6, B));
}
```

```cpp
// apply the matrix to the inlet signals and write the results to the outlets
AH7(inlet_in0, inlet_in1, inlet_in2, inlet_in3, inlet_in4, inlet_in5, inlet_in6,
    outlet_out0, outlet_out1, outlet_out2, outlet_out3, outlet_out4,
    outlet_out5, outlet_out6);
```
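The object's code only builds for the Axoloti firmware, where `___SMMUL` and `___SMMLA` map to the ARM SMMUL and SMMLA instructions. To check the arithmetic on a desktop, the sketch below substitutes hypothetical portable stand-ins, `smmul` and `smmla`. It assumes they keep the upper 32 bits of the signed 64-bit product and take the accumulator as the third argument, as the object's code uses them. The sketch runs the AH7 body with these stand-ins. The hypothetical helper `ah7_max_error_lsb` then reports the worst difference, in integer units, from a double-precision product using the decimal A and B from the comment. Both partial products are rounded down by the shift, so a difference of up to about two units is expected.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical stand-ins for ___SMMUL / ___SMMLA (assumption: same behaviour
// as ARM SMMUL / SMMLA). >> on a negative int64_t is an arithmetic shift on
// mainstream compilers (guaranteed since C++20), so results round down.
static inline int32_t smmul(int32_t a, int32_t b) {
  return static_cast<int32_t>((static_cast<int64_t>(a) * b) >> 32);
}
static inline int32_t smmla(int32_t a, int32_t b, int32_t acc) {
  return acc + smmul(a, b);
}

// The object's AH7 body with the stand-ins substituted.
void AH7(int32_t x0, int32_t x1, int32_t x2, int32_t x3, int32_t x4,
         int32_t x5, int32_t x6, int32_t& y0, int32_t& y1, int32_t& y2,
         int32_t& y3, int32_t& y4, int32_t& y5, int32_t& y6) {
  int32_t A = -1121862100;  // A * 2^32
  int32_t B = 1915138399;   // B * 2^32
  y0 = smmla(x1 + x3 + x5, A, smmul(x0 + x2 + x4 + x6, B));
  y1 = smmla(x0 + x3 + x4, A, smmul(x1 + x2 + x5 + x6, B));
  y2 = smmla(x2 + x3 + x6, A, smmul(x0 + x1 + x4 + x5, B));
  y3 = smmla(x0 + x1 + x2, A, smmul(x3 + x4 + x5 + x6, B));
  y4 = smmla(x1 + x4 + x6, A, smmul(x0 + x2 + x3 + x5, B));
  y5 = smmla(x0 + x5 + x6, A, smmul(x1 + x2 + x3 + x4, B));
  y6 = smmla(x2 + x4 + x5, A, smmul(x0 + x1 + x3 + x6, B));
}

// Worst |fixed-point - exact| over the seven outputs, in integer units,
// where "exact" is the double-precision product with the comment's A and B.
double ah7_max_error_lsb(const int32_t x[7]) {
  const double a = -0.26120387496374144;
  const double b = 0.44590290622280608;
  static const char* const kPattern[7] = {"BABABAB", "ABBAABB", "BBAABBA",
                                          "AAABBBB", "BABBABA", "ABBBBAA",
                                          "BBABAAB"};
  int32_t y[7];
  AH7(x[0], x[1], x[2], x[3], x[4], x[5], x[6],
      y[0], y[1], y[2], y[3], y[4], y[5], y[6]);
  double worst = 0.0;
  for (int i = 0; i < 7; ++i) {
    double exact = 0.0;
    for (int j = 0; j < 7; ++j)
      exact += (kPattern[i][j] == 'A' ? a : b) * static_cast<double>(x[j]);
    worst = std::fmax(worst, std::fabs(static_cast<double>(y[i]) - exact));
  }
  return worst;
}
```

With inputs of magnitude up to 2^27, the inlet sums stay well inside the `int32_t` range, and the error stays within the two to three units the truncation analysis predicts.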